Using transformers that power LLMs to model fMRI BOLD data

The deep learning architecture behind the success of large language models (LLMs) is the Transformer [1], and it can in fact be applied to any sequential data, not only text.
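The sketch below shows why. It is a minimal NumPy version of the scaled dot-product self-attention at the core of the Transformer [1], and nothing in it refers to words: the input is simply a sequence of vectors. This is a single head without masking, multi-head projections or feed-forward layers, and the weight matrices are random stand-ins for learned parameters.

```python
import numpy as np


def scaled_dot_product_attention(
    x: np.ndarray, w_q: np.ndarray, w_k: np.ndarray, w_v: np.ndarray
) -> tuple[np.ndarray, np.ndarray]:
    """Single-head self-attention over a sequence x of shape (seq_len, d_model)."""
    q = x @ w_q  # queries, (seq_len, d_k)
    k = x @ w_k  # keys,    (seq_len, d_k)
    v = x @ w_v  # values,  (seq_len, d_v)
    scores = q @ k.T / np.sqrt(k.shape[-1])         # pairwise similarities
    scores -= scores.max(axis=-1, keepdims=True)    # numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)  # softmax over keys
    return weights @ v, weights


rng = np.random.default_rng(0)
seq_len, d_model, d_k = 6, 8, 4
# Any sequence of vectors works: word embeddings, audio frames, or fMRI patches.
x = rng.standard_normal((seq_len, d_model))
w_q, w_k, w_v = (rng.standard_normal((d_model, d_k)) for _ in range(3))
out, attn = scaled_dot_product_attention(x, w_q, w_k, w_v)
print(out.shape, attn.shape)  # (6, 4) (6, 6)
```

Each row of `attn` says how much every other element of the sequence contributes to the new representation of that element, which is exactly the kind of dependency structure the rest of this post relies on.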

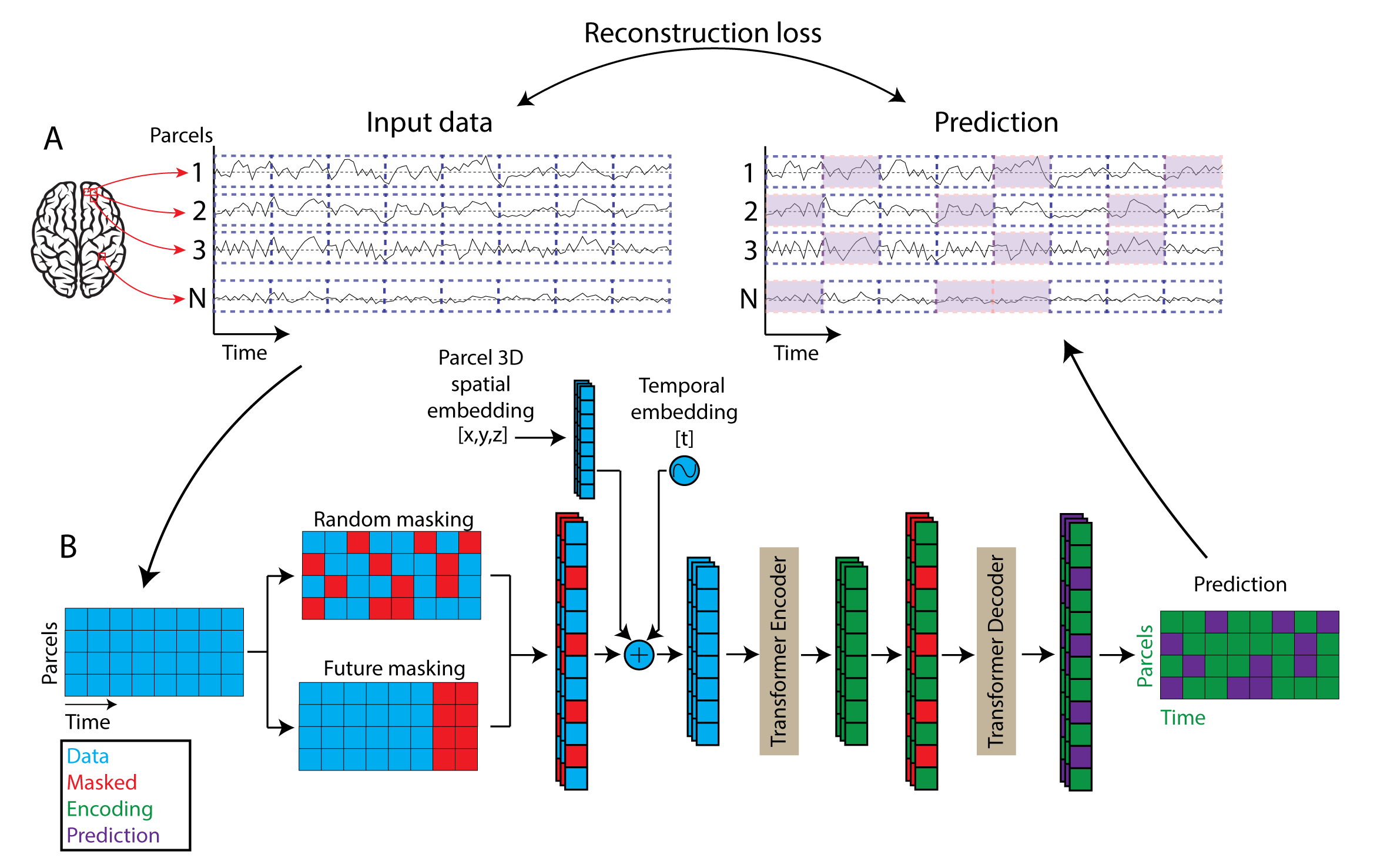

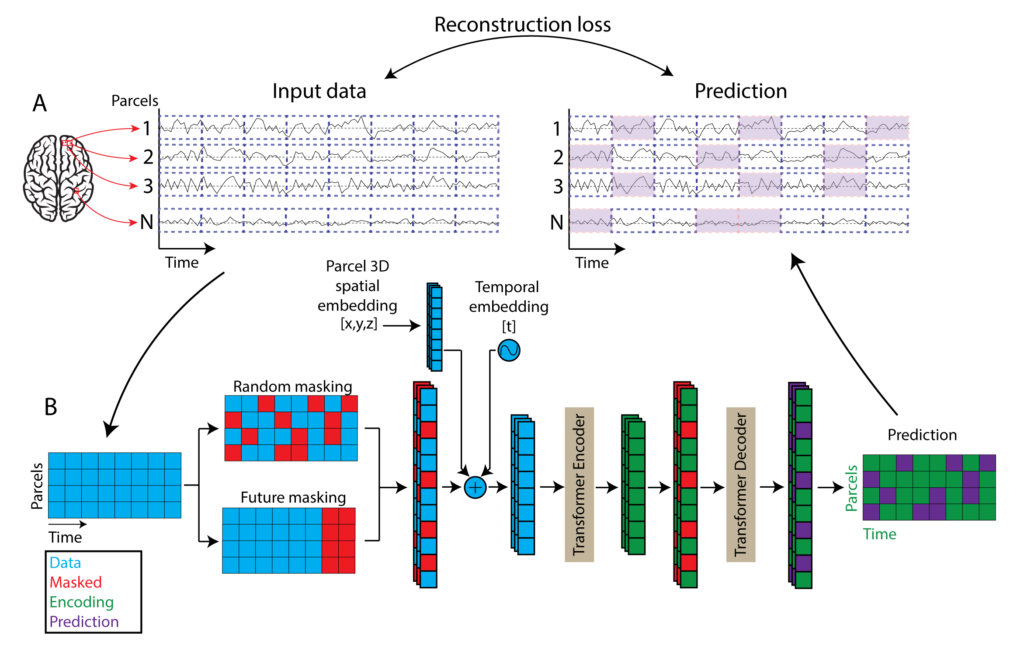

One kind of non-textual data that could benefit greatly from transformer-based modeling is fMRI. By splitting the brain volume into parcels (functional areas defined by an atlas), we obtain multiple BOLD time series running concurrently. Treating these series the way a transformer treats a text sequence lets us capture both (a) dependencies within each parcel's time series and (b) dependencies across parcels. In other words, we can model the BOLD signal in a given parcel as predicted both from the activity that preceded it in that same parcel and from the activity in the other brain regions that influences it.
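A minimal NumPy sketch of how parcellated BOLD data could be turned into transformer tokens follows. It assumes that the scan is a 4D array already registered to atlas space, that the atlas is an integer label volume with 0 as background, that each parcel's signal is the plain mean of its voxels, and that each token is a fixed-length patch of one parcel's time series. The function names and `patch_len` are hypothetical, and real pipelines, BrainLM [2] included, may differ in preprocessing and patching details.

```python
import numpy as np


def parcel_time_series(bold: np.ndarray, atlas: np.ndarray) -> np.ndarray:
    """Average voxel BOLD signals within each atlas parcel.

    bold: 4D array (x, y, z, t); atlas: 3D integer labels (x, y, z), 0 = background.
    Returns shape (n_parcels, t), one row per non-zero label in ascending order.
    """
    labels = np.unique(atlas)
    labels = labels[labels != 0]
    voxels = bold.reshape(-1, bold.shape[-1])  # (n_voxels, t)
    flat_atlas = atlas.reshape(-1)
    return np.stack([voxels[flat_atlas == lab].mean(axis=0) for lab in labels])


def tokenize(series: np.ndarray, patch_len: int) -> tuple[np.ndarray, np.ndarray, np.ndarray]:
    """Cut each parcel's series into non-overlapping patches (tokens).

    Returns tokens (n_parcels * n_patches, patch_len) plus, for every token,
    the parcel it came from and its patch index in time.
    """
    n_parcels, t = series.shape
    n_patches = t // patch_len  # leftover samples at the end are dropped
    trimmed = series[:, : n_patches * patch_len]
    tokens = trimmed.reshape(n_parcels * n_patches, patch_len)
    parcel_id = np.repeat(np.arange(n_parcels), n_patches)
    time_id = np.tile(np.arange(n_patches), n_parcels)
    return tokens, parcel_id, time_id


rng = np.random.default_rng(0)
bold = rng.standard_normal((4, 4, 4, 20))    # toy 4x4x4 volume, 20 time points
atlas = (np.arange(64) % 4).reshape(4, 4, 4)  # toy atlas: background + 3 parcels
series = parcel_time_series(bold, atlas)
tokens, parcel_id, time_id = tokenize(series, patch_len=5)
print(series.shape, tokens.shape)  # (3, 20) (12, 5)
```

Because every token carries both a `parcel_id` and a `time_id`, a model can add an embedding for each, and full self-attention over the token set then relates patches of the same parcel at different times (dependency a) as well as patches of different parcels (dependency b).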

By learning from all of these interdependent signal sequences, a transformer can build a robust model of the fMRI signal across various conditions. Such a model can then be used to infer clinical conditions from new fMRI scans, detect anomalies, and predict a range of other properties of the recordings and their metadata.
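One common way to use such a model for anomaly detection, sketched here as an assumption rather than as the method of any of the cited works, is to compare how well it reconstructs a new scan with how well it reconstructs scans from a reference cohort. In this NumPy sketch the trained model is replaced by synthetic stand-in reconstructions, and the mean-plus-`k`-standard-deviations threshold with `k = 3.0` is an illustrative choice.

```python
import numpy as np


def reconstruction_errors(observed: np.ndarray, reconstructed: np.ndarray) -> np.ndarray:
    """Mean squared error per scan; inputs have shape (n_scans, n_parcels, t)."""
    return ((observed - reconstructed) ** 2).mean(axis=(1, 2))


def flag_anomalies(reference_err: np.ndarray, new_err: np.ndarray, k: float = 3.0) -> np.ndarray:
    """Flag scans whose error exceeds mean + k * std of the reference cohort's errors."""
    threshold = reference_err.mean() + k * reference_err.std()
    return new_err > threshold


rng = np.random.default_rng(0)
ref_obs = rng.standard_normal((50, 10, 30))                   # 50 reference scans
ref_rec = ref_obs + 0.1 * rng.standard_normal(ref_obs.shape)  # stand-in model output
new_obs = rng.standard_normal((2, 10, 30))
new_rec = new_obs + 0.1 * rng.standard_normal(new_obs.shape)
new_rec[1] += 1.0  # simulate a scan the model cannot reconstruct well
flags = flag_anomalies(
    reconstruction_errors(ref_obs, ref_rec),
    reconstruction_errors(new_obs, new_rec),
)
print(flags)
```

Here the second scan is flagged because its reconstruction error lies far outside the range seen in the reference cohort. Inferring clinical conditions would instead typically train a small classifier on the model's learned representations.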

The images above illustrate a transformer architecture applied to fMRI signal reconstruction by the BrainLM project [2].
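Signal reconstruction of this kind is usually trained with a masked-prediction objective: hide some patches, ask the model to fill them in, and score it only on the hidden ones. The NumPy sketch below shows such a generic objective. The 75% masking ratio, the zero-filling of hidden patches and the function names are assumptions for illustration, not BrainLM's exact recipe (see [2] and [3] for that). Masked-autoencoder-style encoders often drop hidden tokens entirely rather than zeroing them.

```python
import numpy as np


def random_patch_mask(n_tokens: int, mask_ratio: float, rng: np.random.Generator) -> np.ndarray:
    """Boolean mask that hides round(mask_ratio * n_tokens) randomly chosen tokens."""
    n_masked = int(round(mask_ratio * n_tokens))
    mask = np.zeros(n_tokens, dtype=bool)
    mask[rng.choice(n_tokens, size=n_masked, replace=False)] = True
    return mask


def masked_mse(target: np.ndarray, prediction: np.ndarray, mask: np.ndarray) -> float:
    """Reconstruction loss computed only on hidden tokens.

    target, prediction: (n_tokens, patch_len); mask: (n_tokens,) boolean.
    """
    diff = target[mask] - prediction[mask]
    return float((diff ** 2).mean())


rng = np.random.default_rng(0)
tokens = rng.standard_normal((12, 5))  # e.g. 3 parcels x 4 patches of length 5
mask = random_patch_mask(len(tokens), mask_ratio=0.75, rng=rng)
model_input = np.where(mask[:, None], 0.0, tokens)  # hidden patches zeroed out
# A trained model would predict `tokens` from `model_input`; this dummy
# "prediction" just copies its input, so it is penalized on every hidden patch.
prediction = model_input
loss = masked_mse(tokens, prediction, mask)
print(int(mask.sum()), loss > 0)  # 9 True
```

Scoring only the hidden patches forces the model to infer them from the visible context, that is, from earlier and later activity in the same parcel and from concurrent activity in other parcels.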

References

[1] Vaswani, Ashish, et al. “Attention is all you need.” Advances in neural information processing systems 30 (2017).

[2] Ortega Caro, Josue, et al. “BrainLM: A foundation model for brain activity recordings.” bioRxiv (2023): 2023-09.

[3] GitHub: BrainLM

[4] Asadi, Nima, Ingrid R. Olson, and Zoran Obradovic. “A transformer model for learning spatiotemporal contextual representation in fMRI data.” Network Neuroscience 7.1 (2023): 22-47.

[5] Yoo, Shinjae, and Peter Kim. “SwiFT: Swin 4D fMRI Transformer.” No. BNL-224824-2023-COPA. Brookhaven National Laboratory (BNL), Upton, NY (United States), 2023.

[6] Malkiel, Itzik, et al. “Self-supervised transformers for fMRI representation.” International Conference on Medical Imaging with Deep Learning. PMLR, 2022.
