Better BCI control by mapping mental state space with self-organising maps and EEG

Action-based brain-computer interfaces (BCIs) rest on the premise that thinking a specific thought produces a distinctive pattern of brain activity. A machine learning system detects this pattern in real time and triggers the corresponding external action. The approach therefore assumes that users can produce stable, recognisable mental states on demand. That assumption does not always hold: the same mental effort (such as thinking about an apple) can produce noticeably different neural activity from one day to the next. Such variability makes it hard for the machine learning system to learn a reliable mapping from neural activity to the intended actions.

In this project, we created a dialogue between the brain and the machine, where both the participant and the machine receive feedback on their performance. The machine provides the user with information about the consistency and distinctness of their thoughts, while the user adjusts their mental activity based on this feedback. Through this interactive process, both parties learn to better understand each other, iteratively converging on a set of mental actions that are consistent and recognisable.
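The post does not say how consistency and distinctness are quantified; the feedback it describes is visual. As a rough numeric proxy only, the sketch below assumes each trial has already been reduced to a fixed-length feature vector and computes two hypothetical scores: the mean distance of each action's trials to their centroid (lower means more consistent) and the smallest distance between action centroids (higher means more distinct). The function name `consistency_and_distinctness` and both scores are illustrative assumptions, not the project's method.

```python
import numpy as np


def consistency_and_distinctness(features, labels):
    """Hypothetical feedback scores for labelled trials (not the paper's method).

    features: (n_trials, n_features) array, one feature vector per trial.
    labels:   sequence of mental-action labels, with at least 2 distinct values.
    Returns (spread, separation):
      spread:     dict label -> mean distance of that label's trials to their
                  centroid (lower = more consistent).
      separation: smallest distance between any two label centroids
                  (higher = more distinct).
    """
    features = np.asarray(features, dtype=float)
    labels = np.asarray(labels)
    classes = np.unique(labels)
    centroids = {c: features[labels == c].mean(axis=0) for c in classes}
    # .item() turns NumPy scalar labels back into plain Python values.
    spread = {
        c.item(): float(np.linalg.norm(features[labels == c] - centroids[c], axis=1).mean())
        for c in classes
    }
    separation = min(
        float(np.linalg.norm(centroids[a] - centroids[b]))
        for i, a in enumerate(classes)
        for b in classes[i + 1:]
    )
    return spread, separation


# Toy example: two tight, well-separated clusters of 2D features.
X = [[0.0, 0.0], [0.2, 0.0], [5.0, 5.0], [5.2, 5.0]]
y = ["left", "left", "right", "right"]
spread, separation = consistency_and_distinctness(X, y)
print(spread, separation)
```

In this toy case both actions are tight (small spread) and far apart (large separation), which is the situation the feedback loop aims for.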

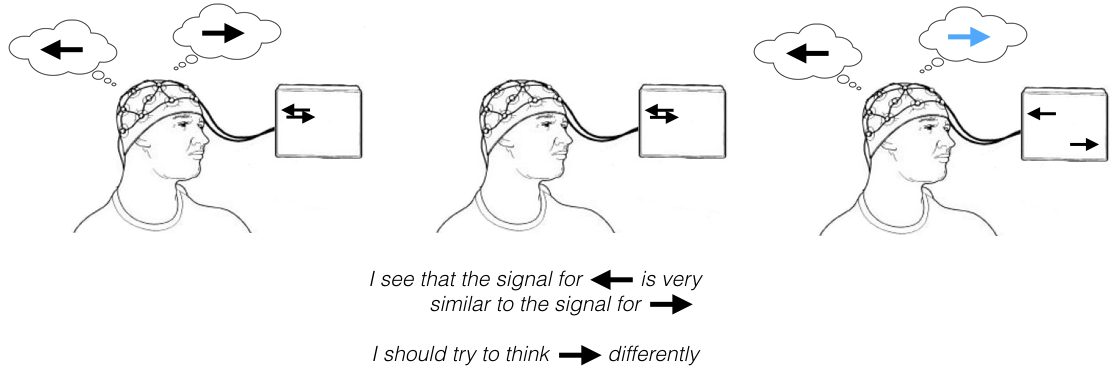

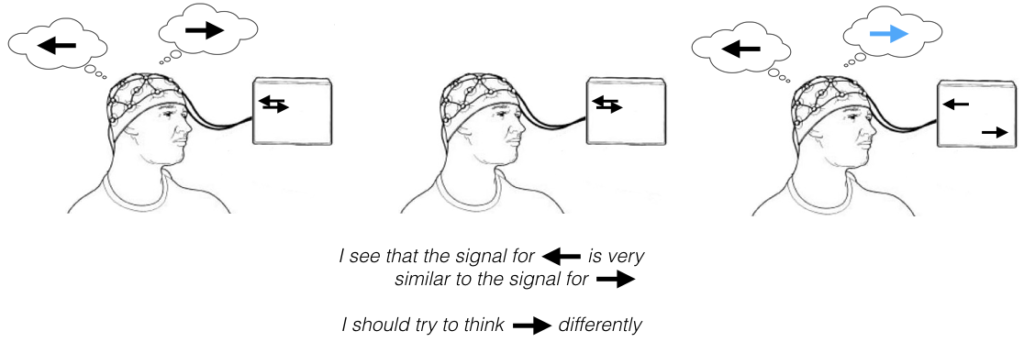

This figure illustrates the three stages of interaction between the participant and the BCI system. First, the participant’s mental state is captured by an EEG device and visualised on a 2D screen. Here, the participant observes that thinking ‘left’ (←) and ‘right’ (→) produces similar brain signals, which land at overlapping positions on the screen. Second, having noticed this, the participant tries a different thought for the ‘right’ (→) action. Finally, after several attempts, they find a thought (or mental state) that generates a sufficiently distinct brain signal, which the machine recognises as different and maps to its own area of the participant’s mental state diagram.

By repeating this process for as many mental actions as needed, the participant finds thoughts that they can produce reliably and that the machine can recognise. The underlying method is built on Self-Organising Maps (see the reference for details) combined with a real-time visualisation:
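For readers unfamiliar with Self-Organising Maps, here is a minimal sketch of the classic online Kohonen rule in NumPy: each input pulls its best-matching unit (BMU) and, more weakly, that unit's grid neighbours towards it, so similar inputs end up at nearby map positions. The grid size, the learning-rate and neighbourhood schedules, and the assumption that each EEG window has already been turned into a fixed-length feature vector are illustrative choices, not the settings used in the referenced paper.

```python
import numpy as np


def best_matching_unit(weights, x):
    """Grid position (row, col) of the node whose weight vector is closest to x."""
    dists = np.linalg.norm(weights - x, axis=-1)
    row, col = np.unravel_index(np.argmin(dists), dists.shape)
    return int(row), int(col)


def train_som(data, grid_h=8, grid_w=8, n_iter=2000, lr0=0.5, seed=0):
    """Train a rectangular self-organising map with the online Kohonen rule.

    data: (n_samples, n_features) feature vectors (assumed already extracted
    from EEG windows). Returns weights of shape (grid_h, grid_w, n_features).
    """
    rng = np.random.default_rng(seed)
    data = np.asarray(data, dtype=float)
    # Initialise every node with a randomly chosen training sample.
    weights = data[rng.integers(len(data), size=(grid_h, grid_w))].copy()
    # Grid coordinates of each node, used to measure distance on the map.
    coords = np.stack(
        np.meshgrid(np.arange(grid_h), np.arange(grid_w), indexing="ij"), axis=-1
    )
    sigma0 = max(grid_h, grid_w) / 2.0
    for t in range(n_iter):
        frac = t / n_iter
        lr = lr0 * (1.0 - frac)                  # learning rate decays linearly
        sigma = max(sigma0 * (1.0 - frac), 0.5)  # neighbourhood shrinks over time
        x = data[rng.integers(len(data))]
        bmu = np.array(best_matching_unit(weights, x))
        grid_d2 = ((coords - bmu) ** 2).sum(axis=-1)  # squared map distance to BMU
        h = np.exp(-grid_d2 / (2.0 * sigma ** 2))     # Gaussian neighbourhood weight
        # Pull the BMU and its map neighbours towards the input.
        weights += lr * h[..., None] * (x - weights)
    return weights


# Toy data: two synthetic "mental actions" with well-separated features.
rng = np.random.default_rng(1)
left = rng.normal(0.0, 0.3, size=(50, 4))
right = rng.normal(5.0, 0.3, size=(50, 4))
weights = train_som(np.vstack([left, right]), grid_h=6, grid_w=6, n_iter=1000)
print(best_matching_unit(weights, left[0]), best_matching_unit(weights, right[0]))
```

Calling `best_matching_unit(weights, x)` on each incoming feature vector yields the 2D map position that a real-time display could highlight.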

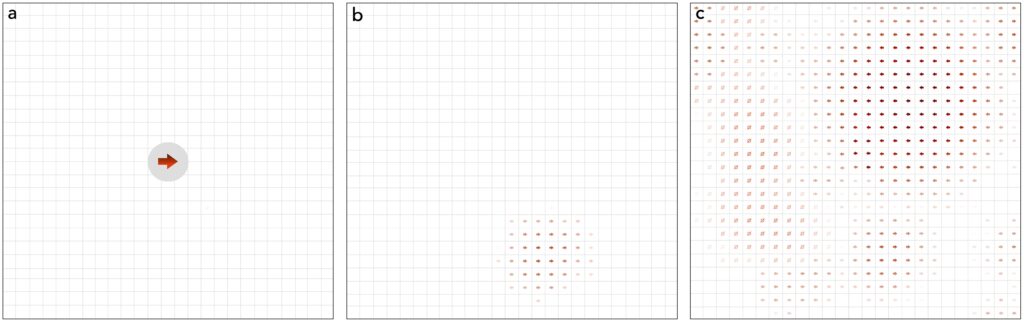

In these three snapshots of the interface, we see distinct stages: (a) During training, the system prompts the user to think “right”, that is, to find a mental action they associate with the “right” command. (b) The user’s brain signal is displayed in real time as a 2D projection; a specific area of the map indicates the mental pattern currently being generated. (c) Later in training, the same map displays all three actions the user has taught the system: left, nothing, and right. The machine has assigned each action its own distinct, non-overlapping area on the map.
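Panel (c) can be read as a labelling step. A minimal sketch, assuming a trained map stored as a `(grid_h, grid_w, n_features)` weight array and a set of labelled training trials: each node takes the majority label of the trials for which it is the best-matching unit, and nodes won by more than one action are flagged as overlap. The helper `label_map` is hypothetical; the referenced paper may delimit the areas differently.

```python
from collections import Counter, defaultdict

import numpy as np


def label_map(weights, features, labels):
    """Hypothetical helper: give each SOM node the majority label of its trials.

    weights:  (grid_h, grid_w, n_features) trained SOM weights.
    features: (n_trials, n_features) training feature vectors.
    labels:   mental-action label of each trial.
    Returns (node_labels, mixed_nodes):
      node_labels: {(row, col): label} for every node that won at least one trial.
      mixed_nodes: sorted nodes won by trials of more than one label, i.e.
                   places where two mental actions overlap on the map.
    """
    weights = np.asarray(weights, dtype=float)
    hits = defaultdict(Counter)
    for x, label in zip(np.asarray(features, dtype=float), labels):
        dists = np.linalg.norm(weights - x, axis=-1)
        row, col = np.unravel_index(np.argmin(dists), dists.shape)
        hits[(int(row), int(col))][label] += 1
    node_labels = {node: c.most_common(1)[0][0] for node, c in hits.items()}
    mixed_nodes = sorted(node for node, c in hits.items() if len(c) > 1)
    return node_labels, mixed_nodes


# Toy 1x3 map; the last "right" trial lands on the "left" node, causing overlap.
weights = [[[0.0, 0.0], [5.0, 5.0], [10.0, 10.0]]]
features = [[0.1, 0.0], [0.2, 0.1], [5.0, 5.1], [9.9, 10.0], [0.0, 0.2]]
labels = ["left", "left", "nothing", "right", "right"]
node_labels, mixed_nodes = label_map(weights, features, labels)
print(node_labels)  # {(0, 0): 'left', (0, 1): 'nothing', (0, 2): 'right'}
print(mixed_nodes)  # [(0, 0)]
```

An empty `mixed_nodes` list corresponds to the non-overlapping layout in panel (c); a non-empty one suggests that an action still needs a more distinct thought.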

The system is now ready to accept mental commands from the user, and we can expect high recognition accuracy, because the training process has already confirmed that these thoughts are recognisable and that the user can generate them consistently.
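Once map nodes carry action labels, recognising a command can be as simple as finding the best-matching unit of the incoming feature vector and reading its label. This is a sketch under stated assumptions: the trained map is a `(grid_h, grid_w, n_features)` weight array and the node labels are a hypothetical `{(row, col): label}` dictionary. Samples that land on unlabelled nodes are rejected as `"unknown"` rather than forced into a class, which is one possible design choice, not necessarily the one used in the project.

```python
import numpy as np


def recognise(weights, node_labels, x, unknown="unknown"):
    """Hypothetical helper: map one feature vector to a command via its BMU.

    weights:     (grid_h, grid_w, n_features) trained SOM weights.
    node_labels: {(row, col): label} assigned to nodes during training.
    Nodes that never won a training trial have no label, so the sample is
    rejected as `unknown` instead of being forced into a class.
    """
    weights = np.asarray(weights, dtype=float)
    dists = np.linalg.norm(weights - np.asarray(x, dtype=float), axis=-1)
    row, col = np.unravel_index(np.argmin(dists), dists.shape)
    return node_labels.get((int(row), int(col)), unknown)


# Toy 1x3 map whose middle node was never labelled.
weights = [[[0.0, 0.0], [5.0, 5.0], [10.0, 10.0]]]
node_labels = {(0, 0): "left", (0, 2): "right"}
for sample in ([0.3, 0.1], [5.0, 4.8], [9.6, 10.2]):
    print(sample, "->", recognise(weights, node_labels, sample))
```

Rejecting ambiguous samples trades a few missed commands for fewer wrong ones, which is usually preferable when a BCI drives an external action.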

References

Kuzovkin, Ilya, Konstantin Tretyakov, Andero Uusberg, and Raul Vicente. “Mental state space visualization for interactive modeling of personalized BCI control strategies.” Journal of Neural Engineering 17, no. 1 (2020): 016059. https://iopscience.iop.org/article/10.1088/1741-2552/ab6d0b
